
Simply leaning back instead of having to pay attention to traffic: that is the vision of autonomous driving. It is meant not only to make travel more pleasant for passengers but also to make it safer than having a human at the wheel, since distraction and human error are the leading causes of traffic fatalities. To handle the driving task successfully, Autonomous Driving Systems (ADS) must be able to recognize objects, assess situations correctly, and master driving skills.

How software helps to reduce human driving errors

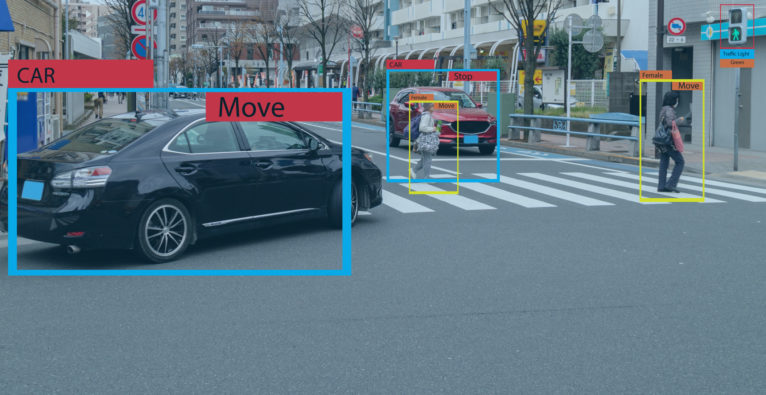

Object recognition takes place in several stages. First, object detection must determine whether and where an object is present at all. Next, each detected object is classified: the system determines whether it is, for example, a vehicle, an adult, a child or an animal, because a child behaves differently from an adult. Finally, the system must perform so-called tracking: it analyzes where the object was in the past and where it is now in order to infer where the object is likely to be next.
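To make the tracking step more concrete, here is a minimal sketch that assumes the simplest possible motion model, constant velocity between the last two observations. Real ADS trackers typically use more elaborate filters, and nothing here describes Deepen.AI's or AVL's software; the `Track` class and `predict_next` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """History of one detected, already classified object: label plus past (x, y) positions in metres."""
    label: str
    positions: list[tuple[float, float]]


def predict_next(track: Track, dt: float = 1.0) -> tuple[float, float]:
    """Extrapolate the next position, assuming constant velocity (a deliberate simplification).

    dt is how many observation intervals to predict ahead.
    """
    if len(track.positions) < 2:
        # A single observation gives no motion estimate, so assume the object stays where it is.
        return track.positions[-1]
    (x0, y0), (x1, y1) = track.positions[-2], track.positions[-1]
    vx, vy = x1 - x0, y1 - y0  # displacement per observation interval
    return (x1 + vx * dt, y1 + vy * dt)


pedestrian = Track(label="adult", positions=[(0.0, 0.0), (1.0, 0.5)])
print(predict_next(pedestrian))  # (2.0, 1.0)
```

The class label is kept on the track because, as the text notes, a child and an adult may warrant different motion assumptions; a fuller sketch could choose the prediction model per label.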

Separating the data wheat from the data chaff

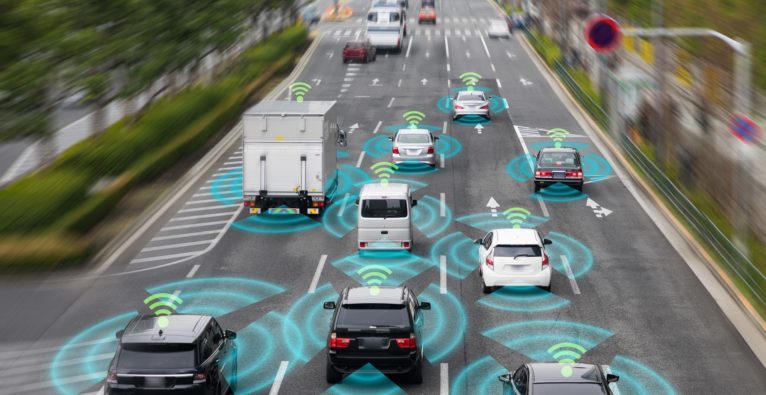

Self-driving cars use data from various sensors installed in the vehicle, such as cameras or LiDAR sensors, which measure the distance between surrounding objects and the car. These sensors produce enormous amounts of data, and it is precisely this data that must be correctly classified so that the AI can identify which parts of it are relevant to safety and which are not.
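How a LiDAR sensor arrives at a distance can be illustrated with pulsed time-of-flight ranging, a common measuring principle that is assumed here (the article does not say which sensor types are used, and some LiDARs work differently, e.g. FMCW). The pulse travels to the object and back, so the distance is half the round-trip path: d = c · Δt / 2. The function name `tof_range_m` is hypothetical.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_range_m(round_trip_s: float) -> float:
    """Distance to a reflecting object from a laser pulse's round-trip time (pulsed time-of-flight assumed)."""
    # Halve the total path length because the pulse goes out and comes back.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


print(round(tof_range_m(200e-9), 2))  # 29.98 (metres, for an echo after 200 ns)
```

Each LiDAR sweep yields many such range measurements, which together form the point cloud that later has to be classified.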

This is where the Silicon Valley company Deepen.AI comes into play. In cooperation with Graz-based AVL, Deepen has developed technology for better detection and segmentation of objects in road-traffic data. First results of this cooperation were presented at CES 2020 in Las Vegas.

PoC with AVL for the future of autonomous driving

Deepen.AI, founded by three former Google employees, focuses on the challenge mentioned above: giving ADSs a correct understanding of the world around them. To get there, the AI needs human help so that it can be trained effectively to make correct inferences. That is why, in addition to its 17 full-time employees, Deepen.AI works with around 250 people in India who clean up the data collected by the sensors and teach the AI to recognize objects: for example, they flag cases where the AI has overlooked a car's side mirror or misclassified an object. “These data analysts clear up doubts that the AI has about some objects,” explains Mohammad Musa, Co-Founder and CEO of Deepen.AI. “They help with classification and calibration.”
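One common way to organize this kind of human help is a human-in-the-loop review queue: confident model predictions are accepted, uncertain ones are sent to an annotator, and the corrected labels flow back into training. The sketch below illustrates only that routing idea; the 0.8 threshold, the `Prediction` class and both functions are hypothetical and are not Deepen.AI's actual workflow. It also does not cover missed objects such as the overlooked side mirror, which an annotator would have to add as a new label.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    object_id: int
    label: str         # class proposed by the model, e.g. "vehicle" or "child"
    confidence: float  # model score in [0, 1]


def split_for_review(preds: list[Prediction], threshold: float = 0.8):
    """Accept confident predictions automatically; queue the uncertain rest for a human annotator."""
    accepted = [p for p in preds if p.confidence >= threshold]
    needs_review = [p for p in preds if p.confidence < threshold]
    return accepted, needs_review


def apply_correction(pred: Prediction, human_label: str) -> Prediction:
    """Record the annotator's decision; confidence 1.0 marks a human-verified label (a simplification)."""
    return Prediction(pred.object_id, human_label, 1.0)


preds = [Prediction(1, "vehicle", 0.97), Prediction(2, "adult", 0.55)]
accepted, queue = split_for_review(preds)
fixed = [apply_correction(p, "child") for p in queue]
print([p.object_id for p in accepted], [(p.object_id, p.label) for p in fixed])  # [1] [(2, 'child')]
```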

This strong focus on data integrity also frames the PoC developed jointly with AVL. “It is important for AVL to have correctly annotated data at pixel and point level,” explains Thomas Schlömicher, Research Engineer ADAS at AVL. Ideally, the cooperation will result in a complete “Data Intelligence Pipeline” that AVL’s numerous B2B customers can use to annotate their data and thus jointly shape the future capabilities of autonomous driving.
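“Pixel level” and “point level” can be read as one class label per camera pixel (a semantic-segmentation mask) and one class label per LiDAR point. A minimal sketch of such annotation records, with a simple completeness check of the kind a data-integrity step might run, could look like this; the label map, class names and check are hypothetical and not AVL's or Deepen.AI's data format.

```python
from collections import Counter
from dataclasses import dataclass

CLASSES = {0: "background", 1: "vehicle", 2: "pedestrian"}  # hypothetical label map


@dataclass
class PixelAnnotation:
    """Camera frame: one class ID per pixel (semantic-segmentation mask), stored row by row."""
    mask: list[list[int]]


@dataclass
class PointAnnotation:
    """LiDAR sweep: one class ID per 3D point (x, y, z in metres)."""
    points: list[tuple[float, float, float]]
    labels: list[int]

    def is_complete(self) -> bool:
        # Every point must carry exactly one label, and every label must be a known class ID.
        return len(self.labels) == len(self.points) and all(c in CLASSES for c in self.labels)


def class_histogram(labels) -> dict[str, int]:
    """Count annotated pixels or points per class name."""
    return {CLASSES[c]: n for c, n in Counter(labels).items()}


frame = PixelAnnotation(mask=[[0, 0, 1], [0, 2, 1]])
sweep = PointAnnotation(points=[(5.0, 0.2, 0.0), (12.1, -1.0, 0.4)], labels=[1, 2])
print(class_histogram(px for row in frame.mask for px in row))  # {'background': 3, 'vehicle': 2, 'pedestrian': 1}
print(sweep.is_complete())  # True
```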

“Safety Pool” as next step after PoC

“Together” is also the keyword behind the goal the partners want to pursue jointly after the successful PoC. One major challenge for the industry is that car manufacturers are currently going different ways, each with its own proprietary approach. “But the industry needs standards,” says Musa. “That is the basis for everyone to trust the safety of the systems.”

That is why Safety Pool™ (www.safetypool.ai), a project led by Deepen and the World Economic Forum, aims to define quantified benchmarks and uniform descriptions of driving situations. These are intended to serve not only as industry standards but also as a solid backbone for deriving consensus-driven safety assessments and framing regulations. This will bring society a significant step closer to benefiting from the revolutionary capabilities of automated driving technologies.
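To illustrate why uniform scenario descriptions matter for quantified benchmarks: once every test result refers to the same structured description, results can be aggregated and compared across systems. The schema below (`Scenario`, `Result`, `pass_rate_by`) is entirely hypothetical and is not the Safety Pool format; the pass rate is just one possible metric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """Hypothetical uniform description of a driving situation (not the Safety Pool schema)."""
    scenario_id: str
    road_type: str        # e.g. "urban", "highway"
    actor: str            # e.g. "child", "vehicle"
    ego_speed_kmh: float


@dataclass(frozen=True)
class Result:
    scenario: Scenario
    passed: bool          # did the ADS handle the situation safely?


def pass_rate_by(results: list[Result], key: str) -> dict[str, float]:
    """Share of passed scenarios, grouped by one scenario attribute such as "road_type"."""
    totals: dict[str, list[int]] = {}
    for r in results:
        group = getattr(r.scenario, key)
        passed, seen = totals.get(group, [0, 0])
        totals[group] = [passed + int(r.passed), seen + 1]
    return {g: p / n for g, (p, n) in totals.items()}


results = [
    Result(Scenario("s1", "urban", "child", 30.0), True),
    Result(Scenario("s2", "urban", "vehicle", 50.0), False),
    Result(Scenario("s3", "highway", "vehicle", 120.0), True),
]
print(pass_rate_by(results, "road_type"))  # {'urban': 0.5, 'highway': 1.0}
```

Because the grouping key is just an attribute name, the same results can be sliced by road type, actor or any other field the shared description defines.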